Computer Architecture Group.

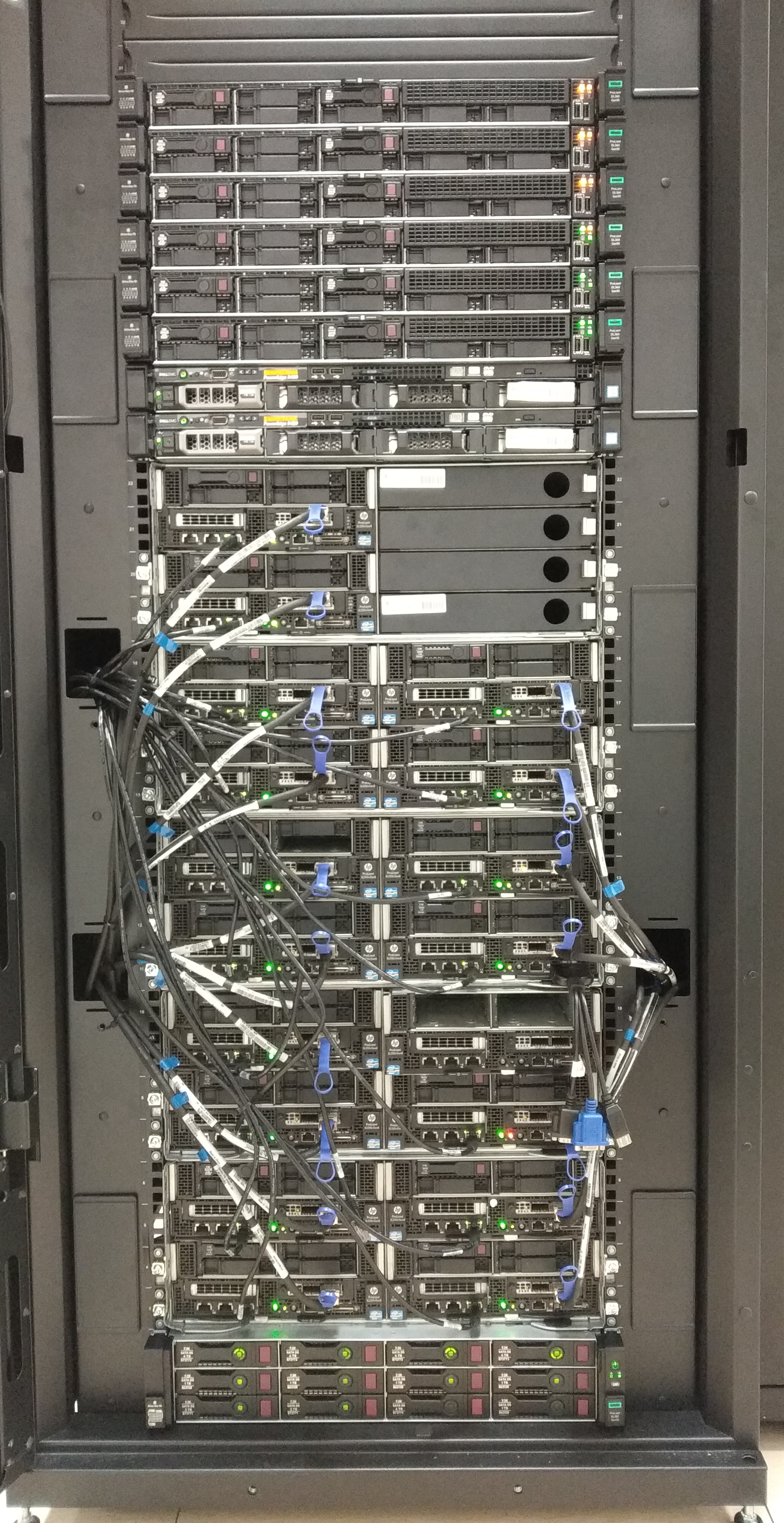

PLUTON CLUSTER

Page last modified on July 31, 2023.

Description

Pluton is a heterogeneous cluster for running High Performance Computing (HPC) applications. It has been co-funded by the Ministry of Economy and Competitiveness of Spain and by the EU through the European Regional Development Fund (project UNLC10-1E-728).

The cluster is managed by the Computer Architecture Group and is currently hosted at CITIC, a research centre with the participation of the University of A Coruña (Spain).

Hardware Configuration

Since its initial deployment in June 2013, the cluster has received several small hardware upgrades funded by more recent research projects. As of July 2023, the cluster consists of:

+ A head node (or front-end node), which serves as the access point where users log in to interact with the cluster. The head node of Pluton can be accessed from the Internet via SSH at pluton.dec.udc.es. Its hardware was upgraded in September 2019 and currently provides up to 12 TiB of global NAS-like storage space for users. It is connected to the computing nodes through both Gigabit Ethernet and InfiniBand FDR.

+ 30 computing nodes, where all the computation is actually performed. These nodes provide all the computational resources (CPUs, GPUs, memory, disks) required for running the applications, with an aggregate computing capacity of up to 720 physical cores (1440 logical threads), 4.4 TiB of memory and 17 NVIDIA Tesla GPUs. All computing nodes are interconnected via Gigabit Ethernet and InfiniBand FDR (56 Gbps) networks.
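Because the computing nodes are heterogeneous, the aggregate figures above only yield averages; no individual node necessarily matches them. The short Python sketch below works out those per-node averages from the quoted totals, assuming binary units (1 TiB = 1024 GiB). The function name `per_node_averages` is hypothetical and only used for illustration.

```python
# Aggregate figures quoted above for the 30 computing nodes (as of July 2023).
NODES = 30
PHYSICAL_CORES = 720
LOGICAL_THREADS = 1440
MEMORY_TIB = 4.4


def per_node_averages():
    """Return mean resources per computing node.

    These are averages over a heterogeneous cluster, not the
    specification of any actual node.
    """
    memory_gib = MEMORY_TIB * 1024  # assumption: 1 TiB = 1024 GiB
    return {
        "cores_per_node": PHYSICAL_CORES / NODES,
        "threads_per_core": LOGICAL_THREADS / PHYSICAL_CORES,
        "memory_gib_per_node": memory_gib / NODES,
        "memory_gib_per_core": memory_gib / PHYSICAL_CORES,
    }


if __name__ == "__main__":
    # Print each average rounded to one decimal place.
    for name, value in per_node_averages().items():
        print(f"{name}: {value:.1f}")
```

On average this gives 24 physical cores, 2 logical threads per core and roughly 150 GiB of memory per node (about 6.3 GiB per core). The 17 GPUs are not averaged, since they are not spread over every node.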